In August this year, Django 3.1 arrived with support for Django async views. This was fantastic news but most people raised the obvious question – What can I do with it? There have been a few tutorials about Django asynchronous views that demonstrate asynchronous execution while calling asyncio.sleep. But that merely led to the refinement of the popular question – What can I do with it besides sleep-ing?

The short answer is – it is a very powerful technique to write efficient views. For a detailed overview of what asynchronous views are and how they can be used, keep on reading. If you are new to asynchronous support in Django and would like more background, read my earlier article: A Guide to ASGI in Django 3.0 and its Performance.

Django Async Views

Django now allows you to write views which can run asynchronously. First let’s refresh your memory by looking at a simple and minimal synchronous view in Django:

    def index(request):
        return HttpResponse("Made a pretty page")

It takes a request object and returns a response object. In a real world project, a view does many things like fetching records from a database, calling a service or rendering a template. But these steps run synchronously, that is, one after the other.

In Django’s MTV (Model Template View) architecture, Views are disproportionately more powerful than the other layers (I find a view comparable to a controller in MVC architecture, though these things are debatable). Once you enter a view you can perform almost any logic necessary to create a response. This is why Asynchronous Views are so important. They let you do more things concurrently.

It is quite easy to write an asynchronous view. For example the asynchronous version of our minimal example above would be:

    async def index_async(request):
        return HttpResponse("Made a pretty page asynchronously.")

This is a coroutine function rather than a regular function. Calling it directly does not run its body; it only returns a coroutine object, which an event loop has to execute. But you do not have to worry about that difference since Django takes care of all that.
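To make that difference concrete outside Django, here is a minimal sketch using only the standard library. The view returns a plain string instead of an HttpResponse, and `asyncio.run` stands in for the event loop management that Django would normally do for you; both are simplifications for illustration.

```python
import asyncio
import inspect


async def index_async(request):
    # Stand-in for a view: returns a plain string instead of an HttpResponse.
    return "Made a pretty page asynchronously."


# Calling the coroutine function does not run its body.
# It only creates a coroutine object.
coro = index_async(request=None)
is_coroutine = inspect.iscoroutine(coro)

# An event loop has to drive the coroutine to completion.
# asyncio.run() creates a loop, runs the coroutine and closes the loop.
result = asyncio.run(coro)

print(is_coroutine)  # True
print(result)        # Made a pretty page asynchronously.
```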

Note that this particular view is not invoking anything asynchronously. If Django is running in the classic WSGI mode, then a new event loop is created (automatically) to run this coroutine. So in this case, it might be slightly slower than the synchronous version. But that’s because you are not using it to run tasks concurrently.

So then why bother writing asynchronous views? The limitations of synchronous views become apparent only at a certain scale. When it comes to large scale web applications, probably nothing beats Facebook.

Views at Facebook

In August, Facebook released a static analysis tool to detect and prevent security issues in Python. But what caught my eye was how the views were written in the examples they had shared. They were all async!

    # views/user.py
    async def get_profile(request: HttpRequest) -> HttpResponse:
        profile = load_profile(request.GET['user_id'])
        ...

    # controller/user.py
    async def load_profile(user_id: str):
        user = load_user(user_id)  # Loads a user safely; no SQL injection
        pictures = load_pictures(user.id)
        ...

    # model/media.py
    async def load_pictures(user_id: str):
        query = f"""
        SELECT *
        FROM pictures
        WHERE user_id = {user_id}
        """
        result = run_query(query)
        ...

    # model/shared.py
    async def run_query(query: str):
        connection = create_sql_connection()
        result = await connection.execute(query)
        ...

Note that this is not Django but something similar. Currently, Django runs the database code synchronously. But that may change sometime in the future.

If you think about it, it makes perfect sense. Synchronous code can be blocked for several microseconds (or much longer) while waiting for an I/O operation. However, its equivalent asynchronous code would not be tied up and can work on other tasks. Therefore it can handle more requests with lower latencies. More requests give Facebook (or any other large site) the ability to handle more users on the same infrastructure.

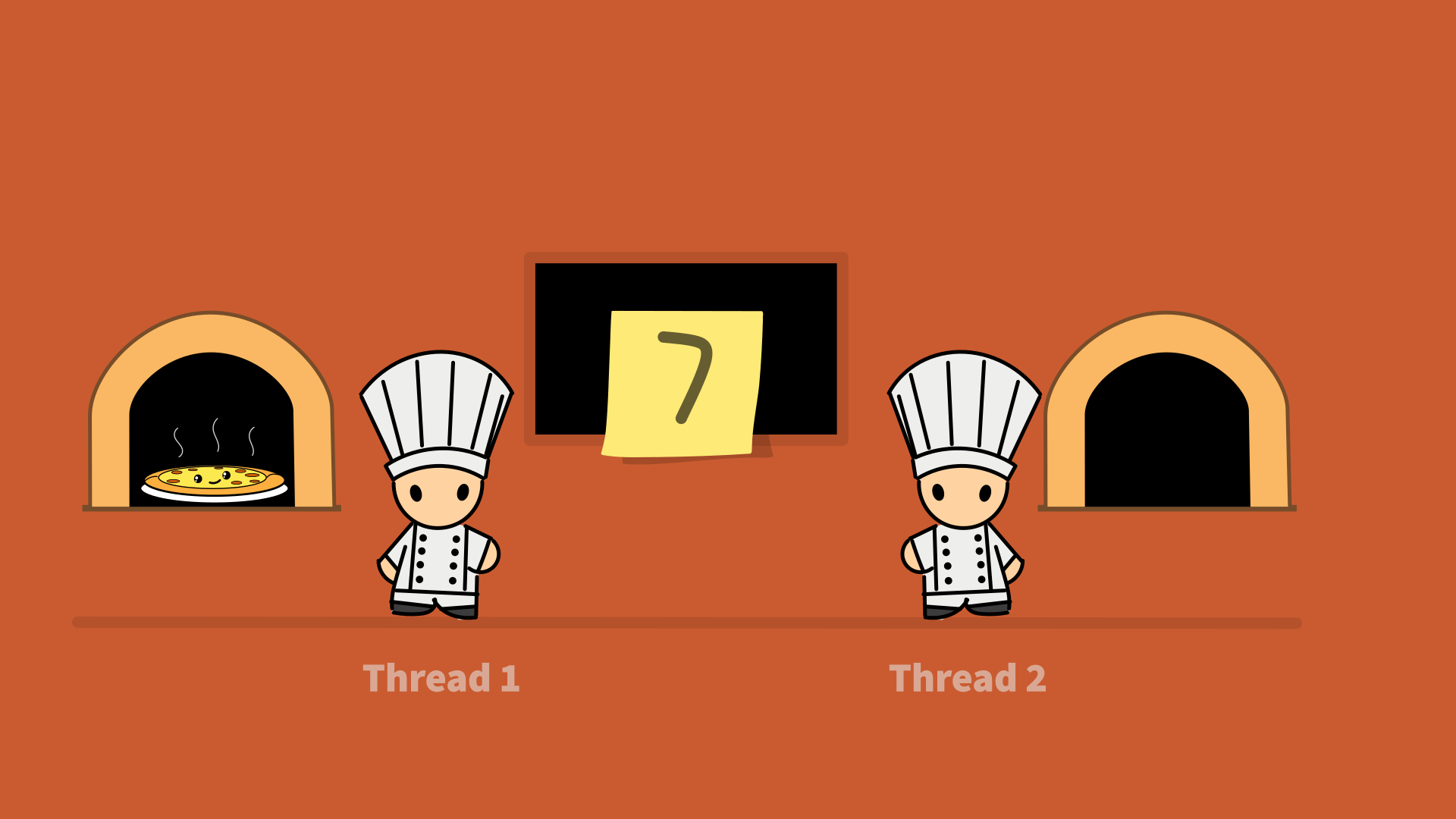

Even if you are not close to reaching Facebook scale, you could use Python’s asyncio as a more predictable alternative to threads for running many things concurrently. A thread scheduler could interrupt a thread in the middle of a destructive update to a shared resource, leading to difficult-to-debug race conditions. Coroutines, by contrast, hand over control only at explicit await points. Compared to threads, coroutines can also achieve a higher level of concurrency with much less overhead.
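The predictability argument can be sketched with a shared counter. This is a minimal standard-library illustration, not Django code; the names `add_one` and `run_many` are made up for the example. Because a coroutine can only be suspended at an `await`, the read-modify-write below cannot be interrupted halfway, so no lock is needed.

```python
import asyncio


async def add_one(shared):
    # Read-modify-write with no await in between: no other coroutine
    # can run between these two lines, so no lock is needed.
    current = shared["value"]
    shared["value"] = current + 1
    # Control goes back to the event loop only here, at an explicit await.
    await asyncio.sleep(0)


async def run_many(n):
    shared = {"value": 0}
    await asyncio.gather(*(add_one(shared) for _ in range(n)))
    return shared["value"]


total = asyncio.run(run_many(1000))
print(total)  # 1000
```

If an `await` were placed between the read and the write, other coroutines could run in that gap and updates could be lost, so the guarantee only holds between await points.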

Misleading Sleep Examples

As I joked earlier, most of the Django async views tutorials show an example involving sleep. Even the official Django release notes had this example:

    async def my_view(request):
        await asyncio.sleep(0.5)
        return HttpResponse('Hello, async world!')

To a Python async guru this code might hint at possibilities that did not exist before. But to the vast majority, this code is misleading in many ways.

Firstly, the sleep happening synchronously or asynchronously makes no difference to the end user. The poor chap who just opened the URL linked to that view will have to wait for 0.5 seconds before it returns a cheeky “Hello, async world!”. If you are a complete novice, you may have expected an immediate reply and somehow the “hello” greeting to appear asynchronously half a second later. Of course, that sounds silly but then what is this example trying to do compared to a synchronous time.sleep() inside a view?

The answer is, as with most things in the asyncio world, in the event loop. If the event loop had some other task waiting to be run, then that half-second window would give it an opportunity to run that task. Note that the other task may take longer than that window to complete. Cooperative multitasking assumes that everyone works quickly and promptly hands control back to the event loop.
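Here is a small sketch of that difference using only the standard library. The "views" are plain coroutines that append to a log instead of returning responses, and `other_task` is a hypothetical stand-in for whatever else the event loop has queued up.

```python
import asyncio
import time


async def polite_view(log):
    log.append("view: start")
    await asyncio.sleep(0.05)  # yields to the event loop while waiting
    log.append("view: done")


async def blocking_view(log):
    log.append("view: start")
    time.sleep(0.05)  # blocks the whole event loop; nothing else can run
    log.append("view: done")


async def other_task(log):
    log.append("other task ran")


async def run_with(view):
    log = []
    # Both coroutines are scheduled on the same event loop, the view first.
    await asyncio.gather(view(log), other_task(log))
    return log


print(asyncio.run(run_with(polite_view)))
# ['view: start', 'other task ran', 'view: done']
print(asyncio.run(run_with(blocking_view)))
# ['view: start', 'view: done', 'other task ran']
```

The user of either view waits just as long. What changes is that the other task gets to run during the asynchronous sleep instead of queuing up behind it.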

Secondly, it does not seem to accomplish anything useful. Some command-line interfaces use sleep to give users enough time to read a message before it disappears. But it is the opposite for web applications - a faster response from the web server is the key to a better user experience. So by slowing the response what are we trying to demonstrate in such examples?

The best explanation for such simplified examples I can give is convenience. It needs a bit more setup to show examples which really need asynchronous support. That’s what we are trying to explore here.

Better examples

A rule of thumb to remember before writing an asynchronous view is to check if it is I/O-bound or CPU-bound. A view which spends most of its time in a CPU-bound activity, e.g. matrix multiplication or image manipulation, would not really benefit from being rewritten as an async view. You should be focussing on the I/O-bound activities.

Invoking Microservices

Most large web applications are moving away from a monolithic architecture to one composed of many microservices. Rendering a view might require the results of many internal or external services.

In our example, an ecommerce site for books renders its front page - like most popular sites - tailored to the logged in user by displaying recommended books. The recommendation engine is typically implemented as a separate microservice that makes recommendations based on past buying history and perhaps a bit of machine learning by understanding how successful its past recommendations were.

In this case, we also need the results of another microservice that decides which promotional banners to display as a rotating banner or slideshow to the user. These banners are not tailored to the logged in user but change depending on the items currently on sale (active promotional campaign) or date.

Let’s look at what a synchronous version of such a page might look like:

    def sync_home(request):
        """Display homepage by calling two services synchronously"""
        context = {}
        try:
            response = httpx.get(PROMO_SERVICE_URL)
            if response.status_code == httpx.codes.OK:
                context["promo"] = response.json()
            response = httpx.get(RECCO_SERVICE_URL)
            if response.status_code == httpx.codes.OK:
                context["recco"] = response.json()
        except httpx.RequestError as exc:
            print(f"An error occurred while requesting {exc.request.url!r}.")
        return render(request, "index.html", context)

Here instead of the popular Python requests library we are using the httpx library because it supports making synchronous and asynchronous web requests. The interface is almost identical.

The problem with this view is that the time taken to invoke these services adds up since the calls happen sequentially. The Python process is suspended until the first service responds, which could take a long time in a worst case scenario.

Let’s try to run them concurrently using a simplistic (and ineffective) await call:

    async def async_home_inefficient(request):
        """Display homepage by calling two awaitables synchronously (does NOT run concurrently)"""
        context = {}
        try:
            async with httpx.AsyncClient() as client:
                response = await client.get(PROMO_SERVICE_URL)
                if response.status_code == httpx.codes.OK:
                    context["promo"] = response.json()
                response = await client.get(RECCO_SERVICE_URL)
                if response.status_code == httpx.codes.OK:
                    context["recco"] = response.json()
        except httpx.RequestError as exc:
            print(f"An error occurred while requesting {exc.request.url!r}.")
        return render(request, "index.html", context)

Notice that the view has changed from a function to a coroutine (due to the async def keywords). Also note that there are two places where we await a response from each of the services. You don’t have to try to understand every line here, as we will explain with a better example.

Interestingly, this view does not work concurrently and takes the same amount of time as the synchronous view. If you are familiar with asynchronous programming, you might have guessed why: simply awaiting a coroutine does not make the view run its own calls concurrently. Each await yields control back to the event loop, which can work on other tasks in the meantime, but the view itself stays suspended until the first response arrives and only then sends the second request.
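The ordering problem is easy to see in a standard-library sketch. Here `fake_service` is a hypothetical stand-in for an HTTP call (it just sleeps), and the log records when each request is sent and answered.

```python
import asyncio


async def fake_service(name, delay, log):
    # Hypothetical stand-in for an HTTP call to a microservice.
    log.append(f"{name}: request sent")
    await asyncio.sleep(delay)
    log.append(f"{name}: response received")
    return f"{name} data"


async def sequential(log):
    # The second call is not even started until the first has finished.
    promo = await fake_service("promo", 0.02, log)
    recco = await fake_service("recco", 0.02, log)
    return promo, recco


log = []
asyncio.run(sequential(log))
print(log)
# ['promo: request sent', 'promo: response received',
#  'recco: request sent', 'recco: response received']
```

The second request is only sent after the first response has been received, so the two waiting times add up just as they do in the synchronous view.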

Let’s look at a proper way to run things concurrently:

    async def async_home(request):
        """Display homepage by calling two services asynchronously (proper concurrency)"""
        context = {}
        try:
            async with httpx.AsyncClient() as client:
                response_p, response_r = await asyncio.gather(
                    client.get(PROMO_SERVICE_URL), client.get(RECCO_SERVICE_URL)
                )
                if response_p.status_code == httpx.codes.OK:
                    context["promo"] = response_p.json()
                if response_r.status_code == httpx.codes.OK:
                    context["recco"] = response_r.json()
        except httpx.RequestError as exc:
            print(f"An error occurred while requesting {exc.request.url!r}.")
        return render(request, "index.html", context)

If the two services we are calling have similar response times, then this view should complete in roughly _half_ the time compared to the synchronous version. This is because the calls happen concurrently as we would want.

Let’s try to understand what is happening here. There is an outer try…except block to catch request errors while making either of the HTTP calls. Then there is an inner async…with block which gives a context having the client object.

The most important line is the one with the asyncio.gather call taking the coroutines created by the two client.get calls. The gather call will execute them concurrently and return only when both of them are completed. The result is a list of responses, in the same order as the coroutines were passed in, which we unpack into two variables response_p and response_r. If there were no errors, these responses are populated in the context sent for template rendering.
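The behaviour of gather can be checked without any network access. In this standard-library sketch, `fake_service` is a hypothetical stand-in for `client.get`, and the delays are arbitrary values chosen so that the second service answers first.

```python
import asyncio


async def fake_service(name, delay, log):
    # Hypothetical stand-in for an HTTP call to a microservice.
    log.append(f"{name}: request sent")
    await asyncio.sleep(delay)
    log.append(f"{name}: response received")
    return f"{name} data"


async def concurrent(log):
    # Both coroutines are started before either one finishes.
    return await asyncio.gather(
        fake_service("promo", 0.05, log),
        fake_service("recco", 0.01, log),
    )


log = []
results = asyncio.run(concurrent(log))
print(log)
# ['promo: request sent', 'recco: request sent',
#  'recco: response received', 'promo: response received']
print(results)
# ['promo data', 'recco data']
```

Both requests are sent before either response arrives, and the results come back in the order the coroutines were passed to gather, not in the order they finished.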

Microservices are typically internal to the organization, hence the response times are low and less variable. Yet, it is never a good idea to rely solely on synchronous calls for communicating between microservices. As the dependencies between services increase, they create long chains of request and response calls. Such chains can slow down services.

Why Live Scraping is Bad

We need to address web scraping because so many asyncio examples use them. I am referring to cases where multiple external websites or pages within a website are concurrently fetched and scraped for information like live stock market (or bitcoin) prices. The implementation would be very similar to what we saw in the Microservices example.

But this is very risky since a view should return a response to the user as quickly as possible. So trying to fetch external sites which have variable response times or throttling mechanisms could result in a poor user experience or, even worse, a browser timeout. Since microservice calls are typically internal, response times can be controlled with proper SLAs.

Ideally, scraping should be done in a separate process scheduled to run periodically (using celery or rq). The view should simply pick up the scraped values and present them to the users.
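The split between a periodic job and a read-only view might look roughly like the sketch below. Everything in it is hypothetical: the in-memory dict stands in for a shared cache or database table, `fetch_price` stands in for the scraper, and the price is a made-up value. In a real project the refresh would run in a separate worker process rather than in the same script.

```python
import asyncio
import time

# In-memory stand-in for a shared cache (hypothetical; a real project
# would use something that the worker and the web process can both reach).
PRICE_CACHE = {}


async def fetch_price(symbol):
    # Hypothetical slow scraper; the sleep stands in for an external site.
    await asyncio.sleep(0.05)
    return 100.0  # made-up value for illustration


async def refresh_prices(symbols):
    # Runs outside the request cycle, e.g. from a periodic job.
    for symbol in symbols:
        price = await fetch_price(symbol)
        PRICE_CACHE[symbol] = {"price": price, "fetched_at": time.time()}


def price_view(symbol):
    # The view only reads what the job stored; it never waits on the
    # external site, so its response time stays predictable.
    entry = PRICE_CACHE.get(symbol)
    if entry is None:
        return {"symbol": symbol, "price": None, "status": "not available yet"}
    return {"symbol": symbol, "price": entry["price"], "status": "ok"}


print(price_view("BTC"))  # status is "not available yet"
asyncio.run(refresh_prices(["BTC"]))
print(price_view("BTC"))  # status is "ok", price is the stored value
```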

Serving Files

Django addresses the problem of serving files by trying hard not to do it itself. This makes sense from a “Do not reinvent the wheel” perspective. After all, there are several better solutions to serve static files like nginx.

But often we need to serve files with dynamic content. Files often reside in (slower) disk-based storage, although we now have much faster SSDs. While this file operation is quite easy to accomplish with Python, it could be expensive in terms of performance for large files. Regardless of the file’s size, this is a potentially blocking I/O operation, and the time spent waiting on it could be used to run another task concurrently.

Imagine we need to serve a PDF certificate in a Django view. However the date and time of downloading the certificate needs to be stored in the metadata of the PDF file, for some reason (possibly for identification and validation).

We will use the aiofiles library here for asynchronous file I/O. The API is almost the same as Python’s familiar built-in file API. Here is how the asynchronous view could be written:

    async def serve_certificate(request):
        timestamp = datetime.datetime.now().isoformat()
        response = HttpResponse(content_type="application/pdf")
        response["Content-Disposition"] = "attachment; filename=certificate.pdf"
        async with aiofiles.open("homepage/pdfs/certificate-template.pdf", mode="rb") as f:
            contents = await f.read()
            response.write(contents.replace(b"%timestamp%", bytes(timestamp, "utf-8")))
        return response

This example illustrates why we need asynchronous template rendering in Django. But until that gets implemented, you could use the aiofiles library to pull local files without skipping a beat.

There are downsides to directly using local files instead of Django’s staticfiles. In the future, when you migrate to a different storage space like Amazon S3, make sure you adapt your code accordingly.

Handling Uploads

On the flip side, uploading a file is also a potentially long, blocking operation. For security and organizational reasons, Django stores all uploaded content into a separate ‘media’ directory.

If you have a form that allows uploading a file, then you need to anticipate that some pesky user would upload an impossibly large one. Thankfully Django passes the file to the view as chunks of a certain size. Combined with the ability of aiofiles to write a file asynchronously, we could support highly concurrent uploads.

    async def handle_uploaded_file(f):
        async with aiofiles.open(f"uploads/{f.name}", "wb+") as destination:
            for chunk in f.chunks():
                await destination.write(chunk)

    async def async_uploader(request):
        if request.method == "POST":
            form = UploadFileForm(request.POST, request.FILES)
            if form.is_valid():
                await handle_uploaded_file(request.FILES["file"])
                return HttpResponseRedirect("/")
        else:
            form = UploadFileForm()
        return render(request, "upload.html", {"form": form})

Again this is circumventing Django’s default file upload mechanism, so you need to be careful about the security implications.
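If you want to try the chunked-write pattern without installing aiofiles, the sketch below swaps in a different technique: it pushes each blocking write onto a worker thread with `asyncio.to_thread` (Python 3.9 or later). The list of byte strings is a hypothetical stand-in for the chunks Django would hand to the view, and `save_chunks` is a name made up for this example.

```python
import asyncio
import os
import tempfile


async def save_chunks(chunks, path):
    # chunks: any iterable of bytes, standing in for an uploaded file's chunks.
    with open(path, "wb") as destination:
        for chunk in chunks:
            # Each blocking write runs in a worker thread, so the event
            # loop stays free to serve other requests between chunks.
            await asyncio.to_thread(destination.write, chunk)


async def demo():
    path = os.path.join(tempfile.mkdtemp(), "upload.bin")
    await save_chunks([b"first-", b"second-", b"third"], path)
    with open(path, "rb") as saved:
        return saved.read()


print(asyncio.run(demo()))  # b'first-second-third'
```

Note that opening the file is still a blocking call in this sketch; only the writes are moved off the event loop.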

Where To Use

The Django Async project has full backward compatibility as one of its main goals. So you can continue to use your old synchronous views without rewriting them into async. Asynchronous views are not a panacea for all performance issues, so most projects will still continue to use synchronous code since it is quite straightforward to reason about.

In fact, you can use both async and sync views in the same project. Django will take care of calling the view in the appropriate manner. However, if you are using async views it is recommended to deploy the application on ASGI servers.

This gives you the flexibility to try asynchronous views gradually, especially for I/O intensive work. You need to be careful to pick only async libraries, or to mix them with sync code carefully (use the async_to_sync and sync_to_async adaptors).
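Django's adaptors are imported from asgiref.sync. To show the idea of crossing the sync/async boundary without requiring Django, the sketch below uses rough standard-library analogues instead: `asyncio.to_thread` (Python 3.9 or later) for calling blocking code from a coroutine, and `asyncio.run` for calling a coroutine from synchronous code. These are stand-ins and do not behave identically to Django's adaptors; `blocking_lookup` is a hypothetical sync-only library call.

```python
import asyncio
import time


def blocking_lookup(user_id):
    # Hypothetical synchronous library call that cannot be awaited.
    time.sleep(0.05)
    return f"user-{user_id}"


async def async_view(user_id):
    # sync -> async direction: run the blocking call in a worker thread
    # so the event loop is not blocked while it waits.
    return await asyncio.to_thread(blocking_lookup, user_id)


def sync_caller(user_id):
    # async -> sync direction: start an event loop just long enough
    # to run the coroutine and return its result.
    return asyncio.run(async_view(user_id))


print(sync_caller(7))  # user-7
```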

Hopefully this writeup gave you some ideas.

Thanks to Chillar Anand and Ritesh Agrawal for reviewing this post. All illustrations courtesy of Old Book Illustrations