In December 2019, Django 3.0 was released with an interesting new feature: support for ASGI servers. I was intrigued by what this meant. When I checked the performance benchmarks of asynchronous Python web frameworks, they were ridiculously faster than their synchronous counterparts, often by a factor of 3x–5x.

So I set out to test Django 3.0 performance with a very simple Docker setup. The results — though not spectacular — were still impressive. But before that, you might need a little bit of background about ASGI.

Before ASGI there was WSGI

It was 2003, and various Python web frameworks like Zope and Quixote shipped with their own web servers or had their own home-grown interfaces to talk to popular web servers like Apache.

Being a Python web developer meant a devout commitment to learning an entire stack, and relearning everything if you needed another framework. As you can imagine, this led to fragmentation. PEP 333, “Python Web Server Gateway Interface v1.0”, tried to solve this problem by defining a simple standard interface called WSGI (Web Server Gateway Interface). Its brilliance was in its simplicity.

In fact, the entire WSGI specification can be simplified (conveniently leaving out some hairy details) as the server side invoking a callable object (i.e. anything from a Python function to a class with a `__call__` method) provided by the framework or the application. If you have a component that can play both roles, then you have created a “middleware” or an intermediate layer in this pipeline. Thus, WSGI components can be easily chained together to handle requests.
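To make the two roles concrete, here is a minimal sketch using only the standard library. The names `hello_app`, `UppercaseMiddleware` and `call_wsgi` are my own illustrations, not part of any framework, and `call_wsgi` plays the server role just enough to invoke an application in memory.

```python
def hello_app(environ, start_response):
    """A minimal WSGI application: a callable taking environ and start_response."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"Hello, World"]


class UppercaseMiddleware:
    """Hypothetical middleware: it looks like a server to the app it wraps
    and like an application to the server that calls it."""

    def __init__(self, app):
        self.app = app

    def __call__(self, environ, start_response):
        # Call the wrapped app, then transform each chunk of its response body.
        return [chunk.upper() for chunk in self.app(environ, start_response)]


def call_wsgi(app, path="/"):
    """Play the server role in memory: build an environ and collect the response."""
    captured = {}

    def start_response(status, headers, exc_info=None):
        captured["status"] = status
        captured["headers"] = headers

    environ = {"REQUEST_METHOD": "GET", "PATH_INFO": path}
    body = b"".join(app(environ, start_response))
    return captured["status"], body


application = UppercaseMiddleware(hello_app)
print(call_wsgi(application))  # ('200 OK', b'HELLO, WORLD')
```

Because the middleware is itself a WSGI callable, it could be wrapped again by another middleware, which is all that chaining amounts to.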

WSGI became so popular that it was adopted not just by the large web frameworks like Django and Pylons but also by microframeworks like Bottle. Your favourite framework could be plugged into any WSGI-compatible application server and it would work flawlessly. It was so easy and intuitive that there was really no excuse not to use it.

Road ‘Blocks’ to Scale

So if we were perfectly fine with WSGI, why did we have to come up with ASGI? The answer will be quite evident if you have followed the path of a web request. Check out my animation of how a web request flows into Django. Notice how the framework is waiting after querying the database before sending the response. This is the drawback of synchronous processing.

Frankly, this drawback was not obvious or pressing until Node.js came onto the scene in 2009. Ryan Dahl, the creator of Node.js, was bothered by the C10K problem, i.e. why popular web servers like Apache could not handle 10,000 or more concurrent connections (given typical web server hardware, they would run out of memory). He asked, “What is the software doing while it queries the database?”

The answer was, of course, nothing. It was waiting for the database to respond. Ryan argued that web servers should not be waiting on I/O activities at all. Instead, they should switch to serving other requests and get notified when the slow activity is completed. Using this technique, Node.js could serve far more users using less memory, and on a single thread!

It was becoming increasingly clear that asynchronous event-based architectures are the right way to solve many kinds of concurrency problems. That is probably why Python’s creator, Guido van Rossum, himself worked towards language-level support with the Tulip project, which later became the asyncio module. Eventually Python 3.5 added the async and await syntax (both became reserved keywords in Python 3.7) to support asynchronous event loops. This has pretty significant consequences, not just for how Python code is written but for how it is executed as well.
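Here is a small sketch of that idea with asyncio. The `fake_query` coroutine is a made-up stand-in for a slow database call; `asyncio.sleep` simply hands control back to the event loop while "waiting".

```python
import asyncio
import time


async def fake_query(name, delay):
    """Stand-in for a slow database call; awaiting yields to the event loop."""
    await asyncio.sleep(delay)
    return f"{name} done"


async def handle_requests():
    # Three "requests" wait on I/O at the same time on a single thread.
    return await asyncio.gather(
        fake_query("a", 0.1),
        fake_query("b", 0.1),
        fake_query("c", 0.1),
    )


start = time.perf_counter()
results = asyncio.run(handle_requests())
elapsed = time.perf_counter() - start
print(results, f"{elapsed:.2f}s")
```

The three waits overlap, so the total time should be close to the longest single wait rather than the sum of all three.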

Two Worlds of Python

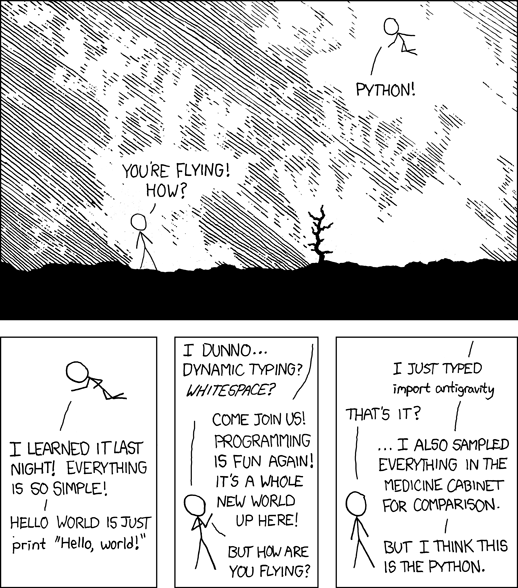

Though writing asynchronous code in Python might seem as easy as sliding an async keyword in front of a function definition, you have to be very careful not to break an important rule: do not freely mix synchronous and asynchronous code.

This is because synchronous code can block an event loop in asynchronous code. Such situations can bring your application to a standstill. As Andrew Godwin writes, this splits your code into two worlds, “Synchronous” and “Asynchronous”, with different libraries and calling styles.
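The effect is easy to observe. In this sketch (all names are mine), a `ticker` coroutine counts how often the event loop gets to run it while another coroutine either calls the blocking `time.sleep` directly or hands it to a worker thread with `run_in_executor`.

```python
import asyncio
import time


async def ticker(counter, interval=0.01):
    """Counts how often the event loop gets a chance to run this coroutine."""
    while True:
        counter["ticks"] += 1
        await asyncio.sleep(interval)


async def measure(use_thread):
    counter = {"ticks": 0}
    task = asyncio.ensure_future(ticker(counter))
    await asyncio.sleep(0)  # let the ticker run once
    if use_thread:
        # Offload the blocking call to a worker thread; the loop stays free.
        loop = asyncio.get_running_loop()
        await loop.run_in_executor(None, time.sleep, 0.2)
    else:
        # Synchronous call inside a coroutine: the whole loop stalls.
        time.sleep(0.2)
    task.cancel()
    try:
        await task
    except asyncio.CancelledError:
        pass
    return counter["ticks"]


blocked_ticks = asyncio.run(measure(use_thread=False))
offloaded_ticks = asyncio.run(measure(use_thread=True))
print(blocked_ticks, offloaded_ticks)
```

When the blocking call runs on the loop's own thread, the ticker barely advances; when it is offloaded, the ticker keeps running throughout.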

Coming back to WSGI, this means we cannot simply write an asynchronous callable and plug it in. WSGI was written for a synchronous world. We will need a new mechanism to invoke asynchronous code. But if everyone writes their own mechanisms we would be back to the incompatibility hell we started with. So we need a new standard similar to WSGI for asynchronous code. Hence, ASGI was born.

ASGI had some other goals as well. But before that let’s look at two similar web applications greeting “Hello World” in WSGI and ASGI style.

In WSGI:

    def application(environ, start_response):
        start_response("200 OK", [("Content-Type", "text/plain")])
        # WSGI expects an iterable of byte strings as the response body
        return [b"Hello, World"]

In ASGI:

    async def application(scope, receive, send):
        # ASGI header names and values must both be byte strings
        await send({"type": "http.response.start", "status": 200, "headers": [(b"content-type", b"text/plain")]})
        await send({"type": "http.response.body", "body": b"Hello World"})

Notice the change in the arguments passed into the callables. The scope argument is similar to the earlier environ argument. The send argument corresponds to start_response. But the receive argument is new. It allows clients to nonchalantly slip messages to the server in protocols like WebSockets that allow bidirectional communications.
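To see `receive` and `send` in action without a real server, here is a rough in-memory sketch. The `echo_app` application and the `call_asgi` helper are hypothetical names of mine; the event dictionaries follow the HTTP message types of the ASGI specification.

```python
import asyncio


async def echo_app(scope, receive, send):
    """Hypothetical ASGI app that echoes the request body back."""
    assert scope["type"] == "http"
    body = b""
    while True:
        message = await receive()  # an "http.request" event from the server
        body += message.get("body", b"")
        if not message.get("more_body", False):
            break
    await send({
        "type": "http.response.start",
        "status": 200,
        "headers": [(b"content-type", b"text/plain")],
    })
    await send({"type": "http.response.body", "body": b"You sent: " + body})


async def call_asgi(app, chunks):
    """Play the server role in memory: feed request chunks, collect sent events."""
    incoming = [
        {"type": "http.request", "body": chunk, "more_body": i < len(chunks) - 1}
        for i, chunk in enumerate(chunks)
    ]
    sent = []

    async def receive():
        return incoming.pop(0)

    async def send(event):
        sent.append(event)

    await app({"type": "http", "method": "POST", "path": "/"}, receive, send)
    return sent


events = asyncio.run(call_asgi(echo_app, [b"Hello, ", b"ASGI"]))
print(events[-1]["body"])  # b'You sent: Hello, ASGI'
```

The application pulls incoming events whenever it is ready for them, which is what lets the same interface carry chunked uploads or WebSocket messages.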

Like WSGI, the ASGI callables can be chained one after the other to handle web requests (as well as other protocol requests). In fact, ASGI is a superset of WSGI and can call WSGI callables. ASGI also has support for long polling, slow streaming and other exciting response types without side-loading resulting in faster responses.

Thus, ASGI introduces new ways to build asynchronous web interfaces and handle bi-directional protocols. Neither the client nor the server needs to wait for the other to communicate; it can happen at any time, asynchronously. Existing WSGI-based web frameworks, being written in synchronous code, would not support this event-driven way of working.

Django Evolves

This also brings us to the crux of the problem with bringing all the async goodness to Django - all of Django was written in synchronous style code. If we need to write any asynchronous code then there needs to be a clone of the entire Django framework written in asynchronous style. In other words, create two worlds of Django.

Well, don’t panic: we might not have to write an entire clone, as there are clever ways to reuse bits of code between the two worlds. But as Andrew Godwin, who leads Django’s Async Project, rightly remarks, “it’s one of the biggest overhauls of Django in its history”. It is an ambitious project involving the reimplementation of components like the ORM, request handler, template renderer, etc. in asynchronous style. This will be done in phases and over several releases. Here is how Andrew envisions it (not to be taken as a committed schedule):

- Django 3.0 - ASGI Server

- Django 3.1 - Async Views (see an example below)

- Django 3.2/4.0 - Async ORM

You might be wondering about the rest of the components, like template rendering, forms, cache, etc. They may remain synchronous, or an asynchronous implementation may be fitted somewhere in the future roadmap. But the above are the key milestones in evolving Django to work in an asynchronous world.

That brings us to the first phase.

Django talks ASGI

In 3.0, Django can work in an “async outside, sync inside” mode. This allows it to talk to all known ASGI servers such as:

- Daphne - an ASGI reference server, built on Twisted

- Uvicorn - a fast ASGI server based on uvloop and httptools

- Hypercorn - an ASGI server based on the sans-io hyper, h11, h2, and wsproto libraries

It is important to reiterate that internally Django is still processing requests synchronously in a threadpool. But the underlying ASGI server would be handling requests asynchronously.
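The following is only a rough standard-library sketch of the “async outside, sync inside” idea, not Django's actual handler. The `sync_view` function stands in for synchronous Django code, and `serve` is a tiny in-memory substitute for an ASGI server; both names are assumptions of mine.

```python
import asyncio
import threading
import time


def sync_view(path):
    """Hypothetical blocking view: stands in for synchronous Django code."""
    time.sleep(0.05)  # pretend to wait on the database
    return f"{path} handled in {threading.current_thread().name}".encode()


async def application(scope, receive, send):
    """Async on the outside: the event loop only awaits the worker thread."""
    loop = asyncio.get_running_loop()
    body = await loop.run_in_executor(None, sync_view, scope["path"])
    await send({"type": "http.response.start", "status": 200,
                "headers": [(b"content-type", b"text/plain")]})
    await send({"type": "http.response.body", "body": body})


async def serve(paths):
    """Tiny in-memory stand-in for an ASGI server handling requests concurrently."""
    async def handle(path):
        sent = []

        async def receive():
            return {"type": "http.request", "body": b"", "more_body": False}

        async def send(event):
            sent.append(event)

        await application({"type": "http", "path": path}, receive, send)
        return sent[-1]["body"]

    return await asyncio.gather(*(handle(p) for p in paths))


main_thread = threading.current_thread().name
bodies = asyncio.run(serve(["/polls/1/", "/polls/2/"]))
print(bodies)
```

Each response body reports a worker thread name rather than the thread running the event loop, which is the point: the blocking work happens in the pool while the loop stays free to accept more requests.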

This means your existing Django projects require no changes. Think of this change as merely a new interface by which HTTP requests can enter your Django application.

But this is a significant first step in transforming Django from the “outside-in”. You could also start running Django on an ASGI server, which is usually faster.

How to use ASGI?

Every Django project (since version 1.4) ships with a wsgi.py file, which is a WSGI handler module. While deploying to production, you will point your WSGI server, like Gunicorn, to this file. For instance, you might have seen this line in your Docker Compose file:

command: gunicorn mysite.wsgi:application

If you create a new Django project (e.g. by running the django-admin startproject command), then you will find a brand new file, asgi.py, alongside wsgi.py. You will need to point your ASGI server (like Daphne) to this ASGI handler file. For example, the above line would be changed to:

command: daphne mysite.asgi:application

Note that this requires the presence of an asgi.py file.

Running Existing Django Projects under ASGI

None of the projects created before Django 3.0 have an asgi.py. So how do you go about creating one? It is quite easy.

Here is a side-by-side comparison (docstrings and comments omitted) of the wsgi.py and asgi.py for a Django project:

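| WSGI | ASGI |
|---|---|
| `import os` | `import os` |
| `from django.core.wsgi import get_wsgi_application` | `from django.core.asgi import get_asgi_application` |
| `os.environ.setdefault('DJANGO_SETTINGS_MODULE', 'mysite.settings')` | `os.environ.setdefault('DJANGO_SETTINGS_MODULE', 'mysite.settings')` |
| `application = get_wsgi_application()` | `application = get_asgi_application()` |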

If you are squinting too hard to find the differences, let me help you - everywhere ‘wsgi’ is replaced by ‘asgi’. Yes, it is as straightforward as taking your existing wsgi.py and running a string replacement s/wsgi/asgi/g.
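If you would rather script the replacement, here is a small sketch. The `WSGI_SOURCE` string assumes the default wsgi.py that startproject generates for a project named `mysite` (the name used in the Gunicorn example earlier); in a real project you would read your own wsgi.py instead.

```python
# Assumed contents of a default wsgi.py for a project called "mysite".
WSGI_SOURCE = """\
import os

from django.core.wsgi import get_wsgi_application

os.environ.setdefault('DJANGO_SETTINGS_MODULE', 'mysite.settings')

application = get_wsgi_application()
"""


def wsgi_to_asgi(source):
    """Equivalent of running s/wsgi/asgi/g over the module source."""
    return source.replace("wsgi", "asgi")


ASGI_SOURCE = wsgi_to_asgi(WSGI_SOURCE)
print(ASGI_SOURCE)
```

Writing `ASGI_SOURCE` to an asgi.py file next to wsgi.py would complete the step. If your wsgi.py contains custom code, review the result by hand rather than trusting a blind replacement.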

Gotchas

You should take care not to call any blocking synchronous code in the ASGI handler in your asgi.py. For example, if you call some web API within your ASGI handler for some reason, then the call must be awaitable (an asyncio coroutine) rather than a blocking function.

ASGI vs WSGI Performance

I did a very simple performance test trying out the Django polls project in ASGI and WSGI configurations. Like all performance tests, you should take my results with liberal doses of salt. My Docker setup includes Nginx and PostgreSQL. The actual load testing was done using the versatile Locust tool.

The test case was opening a poll form in the Django polls application and submitting a random vote. It makes n requests per second when there are n users. The wait time is between 1 and 2 seconds.

The results shown below indicate an increase of roughly 66% in the number of simultaneous users handled without failures when running in ASGI mode compared to WSGI mode.

| Users | 100 | 200 | 300 | 400 | 500 | 600 | 700 |
|---|---|---|---|---|---|---|---|
| WSGI Failures | 0% | 0% | 0% | 5% | 12% | 35% | 50% |
| ASGI Failures | 0% | 0% | 0% | 0% | 0% | 15% | 20% |

As the number of simultaneous requests ramps up, the WSGI or ASGI handler will not be able to cope beyond a certain point, resulting in errors or failures. The requests per second after the WSGI failures start vary wildly. The ASGI performance is much more stable, even after failures.

As the table shows, the number of simultaneous users handled without failures is around 300 for WSGI and 500 for ASGI on my machine. This is about a 66% increase in the number of users the servers can handle without error. Your mileage might vary.
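For anyone who wants to check the arithmetic, this short sketch recomputes the figure from the table. The helper name `max_error_free_users` is my own.

```python
users = [100, 200, 300, 400, 500, 600, 700]
wsgi_failures = [0, 0, 0, 5, 12, 35, 50]  # percent, from the results table
asgi_failures = [0, 0, 0, 0, 0, 15, 20]   # percent, from the results table


def max_error_free_users(users, failures):
    """Largest tested user count at which no request failed."""
    return max(u for u, f in zip(users, failures) if f == 0)


wsgi_max = max_error_free_users(users, wsgi_failures)
asgi_max = max_error_free_users(users, asgi_failures)
increase = (asgi_max - wsgi_max) / wsgi_max * 100
print(wsgi_max, asgi_max, f"{increase:.1f}%")  # 300 500 66.7%
```

Since the test only sampled user counts in steps of 100, the true thresholds could lie anywhere within those steps, so treat the percentage as a rough indication.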

Frequent Questions

I gave a talk about ASGI and Django at BangPypers recently, and the audience raised a lot of interesting questions (even after the event). So I thought I would address them here (in no particular order):

Q. Is Django Async the same as Channels?

Channels was created to support asynchronous protocols like WebSockets and long-polling HTTP. Django applications still run synchronously. Channels is an official Django project but not part of core Django.

The Django Async project will support writing Django applications with asynchronous code in addition to synchronous code. Async is a part of Django core.

Both were led by Andrew Godwin.

These are independent projects in most cases. You can have a project that uses either or both. For example, if you need to support a chat application over WebSockets, then you can use Channels without using Django’s ASGI interface. On the other hand, if you want to write an async function in a Django view, then you will have to wait for Django’s Async support for views.

Q. Any new dependencies in Django 3.0?

Installing just Django 3.0 will install the following into your environment:

    $ pip freeze
    asgiref==3.2.3
    Django==3.0.2
    pytz==2019.3
    sqlparse==0.3.0

The asgiref library is a new dependency. It contains sync-to-async and async-to-sync function wrappers so that you can call sync code from async and vice versa. It also contains a StatelessServer and a WSGI-to-ASGI adapter.
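In asgiref these wrappers are `sync_to_async` and `async_to_sync` in the `asgiref.sync` module. The sketch below is not how asgiref implements them; it only illustrates the idea with the standard library. The names `to_async`, `to_sync`, `blocking_lookup` and `async_view` are hypothetical.

```python
import asyncio
import functools


def to_async(func):
    """Sketch of a sync-to-async wrapper: run the blocking function in a thread."""
    @functools.wraps(func)
    async def wrapper(*args, **kwargs):
        loop = asyncio.get_running_loop()
        call = functools.partial(func, *args, **kwargs)
        return await loop.run_in_executor(None, call)
    return wrapper


def to_sync(coro_func):
    """Sketch of an async-to-sync wrapper: run the coroutine in a one-off event loop."""
    @functools.wraps(coro_func)
    def wrapper(*args, **kwargs):
        return asyncio.run(coro_func(*args, **kwargs))
    return wrapper


def blocking_lookup(poll_id):
    """Stand-in for synchronous code such as a database query."""
    return {"id": poll_id, "question": "What's up?"}


async def async_view(poll_id):
    poll = await to_async(blocking_lookup)(poll_id)  # sync code called from async
    return poll["question"]


print(to_sync(async_view)(1))  # async code called from sync
```

Note that this simplified `to_sync` cannot be called from inside an already running event loop. Handling cases like that is part of what the real library takes care of.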

Q. Will upgrading to Django 3.0 break my project?

Version 3.0 might sound like a big change from the previous version, Django 2.2. But that is slightly misleading. The Django project does not follow semantic versioning exactly (where a major version number change may break the API), and the differences are explained in the Release Process page.

You will notice very few serious backward incompatible changes in the Django 3.0 release notes. If your project does not use any of them, then you can upgrade without any modifications.

Then why did the version number jump from 2.2 to 3.0? This is explained in the release cadence section:

Starting with Django 2.0, version numbers will use a loose form of semantic versioning such that each version following an LTS will bump to the next “dot zero” version. For example: 2.0, 2.1, 2.2 (LTS), 3.0, 3.1, 3.2 (LTS), etc.

Since the last release, Django 2.2, was a long-term support (LTS) release, the following release had to increase the major version number to 3.0. That’s pretty much it!

Q. Can I continue to use WSGI?

Yes. Asynchronous programming can be seen as an entirely optional way to write code in Django. The familiar synchronous way of using Django will continue to work and be supported.

Andrew writes:

Even if there is a fully asynchronous path through the handler, WSGI compatibility has to also be maintained; in order to do this, the WSGIHandler will coexist alongside a new ASGIHandler, and run the system inside a one-off eventloop - keeping it synchronous externally, and asynchronous internally.

This will allow async views to do multiple asynchronous requests and launch short-lived coroutines even inside of WSGI, if you choose to run that way. If you choose to run under ASGI, however, you will then also get the benefits of requests not blocking each other and using less threads

Q. When can I write async code in Django?

As explained earlier in the Async Project roadmap, it is expected that Django 3.1 will introduce async views which will support writing asynchronous code like:

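    import asyncio
    from django.http import HttpResponse

    async def view(request):
        # Yield to the event loop instead of blocking a thread
        await asyncio.sleep(0.5)
        return HttpResponse("Hello, async world!")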

You are free to mix async and sync views, middleware, and tests as much as you want; Django will ensure that you always end up with the right execution context. Sounds pretty awesome, right?

At the time of writing, the patch is almost certain to land in 3.1 and is awaiting a final review.

Wrapping Up

We covered a lot of background about what led to Django supporting asynchronous capabilities. We tried to understand ASGI and how it compares to WSGI. We also found some performance improvements in terms of increased number of simultaneous requests in ASGI mode. A number of frequently asked questions related to Django’s support of ASGI were also addressed.

I believe asynchronous support in Django could be a game changer. It will be one of the first large Python web frameworks to evolve into handling asynchronous requests. It is admirable that it is done with a lot of care not to break backward compatibility.

I usually do not make my tech predictions public (frankly many of them have been proven right over the years). So here goes my tech prediction — Most Django deployments will use async functionality in five years.

That should be enough motivation to check it out!

Thanks to Andrew Godwin for his comments on an early draft. All illustrations courtesy of Old Book Illustrations.