If you could design a new programming language, what would it be like? It is a question I have had ever since I took the Programming Languages (PL) course in the third year of my Computer Science Engineering degree, way back in 2001. For the first time, I have an answer – Punchscript.

Punchscript is a programming language made up of punch dialogues by the Indian movie star Rajinikanth. Punch dialogues are more punchlines than dialogues, delivered in Rajini’s inimitable style in the form of an aphorism or a retort.

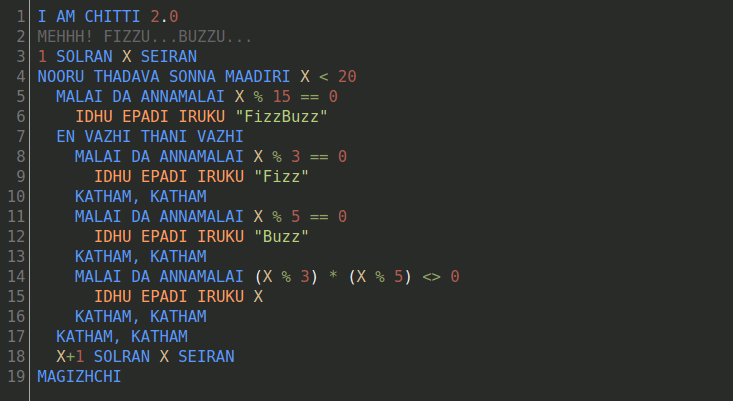

Here is what the customary Fizz Buzz looks like in Punchscript:

Punchscript works with only a signed integer datatype. So instead of boolean logic operators, you’ll need to use integer arithmetic. Also, it supports only IF… ELSE rather than IF… ELSEIF… compound statements. Notice how these limitations are worked around in the code above.
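To make the two workarounds concrete without relying on Punchscript's own keywords, here is a rough sketch in OCaml rather than Punchscript. It assumes the C-style convention that 1 means true and 0 means false; the helper names `divides` and `both` are made up for illustration and are not Punchscript built-ins. Logical AND is replaced by multiplication, and the missing ELSEIF is replaced by nesting a fresh IF inside each ELSE arm.

```ocaml
(* Integer-only "truth": 1 means true, 0 means false (an assumed convention).
   These helpers are illustrative; they are not Punchscript built-ins. *)
let divides d n = if n mod d = 0 then 1 else 0

(* AND becomes multiplication: the product is non-zero only if both are. *)
let both a b = a * b

(* FizzBuzz using only two-way branches, nested inside the else arms. *)
let fizzbuzz n =
  if both (divides 3 n) (divides 5 n) <> 0 then "FizzBuzz"
  else begin
    if divides 3 n <> 0 then "Fizz"
    else begin
      if divides 5 n <> 0 then "Buzz"
      else string_of_int n
    end
  end

let () =
  for i = 1 to 15 do
    print_endline (fizzbuzz i)
  done
```

In the same spirit, OR can be expressed as addition of the two flags, since the sum is non-zero when either flag is set.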

You can try Punchscript in your browser and run various examples. Despite its limitations, you can write all kinds of non-trivial algorithms. It is Turing Complete, like most languages. So any computation can be performed in it. But I would not recommend betting your next startup on it.

Chasing the Camel

Remember my PL course of 2001? Among the half a dozen languages we read about, one language stood out as both elegant and practical. That was ML (ML stood for Meta Language long before some smart guy recently called it Machine Learning). We learnt Standard ML at that time, but even then OCaml was much more popular.

Then, year after year, nearly every ICFP functional programming contest had OCaml mentioned by one of the top three winners (the trend changed in recent years). The syntax seemed easy enough and I could pick it up in a few days. After a while, I would completely forget about it, get interested in ML again and have to re-learn the whole thing. I have tracked this Sisyphean task of learning OCaml in a decade-old journal on Google Docs.

Eventually, I realized I needed to do something significant in OCaml. Now which programming problem would be of a moderate size and ideally solved in OCaml? The obvious answer was a compiler (with a ray tracer being a distant second). The language is excellent at manipulating algebraic data types, which are perfect for abstract syntax trees. Even better, the js_of_ocaml tool helps you convert the implementation to Javascript, so that your compiler can run in the browser!
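As a small illustration of why algebraic data types suit abstract syntax trees, here is a minimal sketch of a toy expression language and its evaluator. The `expr` type and its constructors are hypothetical and are not taken from the Punchscript sources; the point is only that each kind of syntax node becomes one constructor, and pattern matching handles each case in a single line.

```ocaml
(* A toy expression language; the constructors are hypothetical
   and are not taken from the Punchscript sources. *)
type expr =
  | Int of int
  | Var of string
  | Add of expr * expr
  | Mul of expr * expr
  | Neg of expr

(* Evaluate an expression, looking variables up in an association list.
   An unbound variable raises Not_found via List.assoc. *)
let rec eval env = function
  | Int n -> n
  | Var x -> List.assoc x env
  | Add (a, b) -> eval env a + eval env b
  | Mul (a, b) -> eval env a * eval env b
  | Neg e -> - (eval env e)

let () =
  (* (x + 2) * -3 with x bound to 4 *)
  let e = Mul (Add (Var "x", Int 2), Neg (Int 3)) in
  Printf.printf "%d\n" (eval [ ("x", 4) ] e)
```

If a new constructor is added to `expr` and `eval` is not updated, the compiler warns that the match is not exhaustive, which is a large part of what makes this style pleasant for language work.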

Now comes the question of which language to implement.

Why this Kolavari?

It is way more fun to design your own programming language than to implement an existing one. You start by thinking what new syntax you can come up with.

I thought - syntactic elements should be short and memorable like… punchlines. A bit of a stretch, but it sounded like a fun project. Rajinikanth movies seemed to be a goldmine of punch dialogues, with most movies having at least one.

It took a lot of binge-watching research to find the best phrase for a loop or conditional statement. I learnt Tamil by ear, so it is not perfect. But I must say that inventing a new language from scratch is both demanding and rewarding.

The hardest part is selecting the smallest amount of syntax that is still useful. It is really tempting to assemble all your favourite features from other languages, but then your project would never get shipped. And as we know, shipping is the most important feature.

The actual implementation took me 4 to 6 weeks (working mostly on weekends, including combing through the user manuals of OCaml tools). Along the way, there were so many decisions to make, like:

- Whitespace significance

- Data structures

- Compiled or Interpreted

- Functions or procedures

- Virtual Machine or direct AST execution

Funnily enough, I often picked the third choice of YAGNI. If we can live without a feature, leave it out. This not only made the implementation easier but also made the language more elegant.

There are so many ways to set up an OCaml development environment. I’ve set up all the modern OCaml tools needed in a Docker container. Keeping everything in Docker makes it easier to manage and reproduce.

Opening the Toolbox

The best instructions for setting up a modern OCaml development environment can be found at the Real World OCaml book’s site. The first step is to install Opam – OCaml’s package manager plus isolated environment manager (sort of like Python’s pipenv).

I started by creating a Dockerfile based on the opam:alpine_ocaml image. You will need to reproduce the installation commands in the Dockerfile. The only change I made was installing with opam depext instead of opam install, so that external dependencies are installed as well.

Next, we need to install OCaml’s compiler building tools - ocamllex and Menhir. There is an excellent chapter on Parsing in the Real World OCaml book which is a good introduction to using these tools. If you are familiar with YACC and LEX from other programming languages like C, it should be like meeting an old friend.
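For readers who have not met LEX-style tools before, the following is a rough sketch of the job a generated lexer does: turning raw text into a list of tokens for the parser. It is written by hand in plain OCaml, not generated by a lexer tool, and the `token` type and `tokenize` function are hypothetical names for a tiny arithmetic language, not Punchscript's actual lexer.

```ocaml
(* Hypothetical token set for a tiny arithmetic language. *)
type token =
  | INT of int
  | IDENT of string
  | PLUS
  | TIMES
  | LPAREN
  | RPAREN

let is_digit c = c >= '0' && c <= '9'
let is_alpha c = (c >= 'a' && c <= 'z') || (c >= 'A' && c <= 'Z')

(* Turn a string into a list of tokens, skipping whitespace. *)
let tokenize (s : string) : token list =
  let n = String.length s in
  (* Return the first index >= i at which [pred] no longer holds. *)
  let rec span pred i =
    if i < n && pred s.[i] then span pred (i + 1) else i
  in
  let rec go i acc =
    if i >= n then List.rev acc
    else
      match s.[i] with
      | ' ' | '\t' | '\n' -> go (i + 1) acc
      | '+' -> go (i + 1) (PLUS :: acc)
      | '*' -> go (i + 1) (TIMES :: acc)
      | '(' -> go (i + 1) (LPAREN :: acc)
      | ')' -> go (i + 1) (RPAREN :: acc)
      | c when is_digit c ->
          let j = span is_digit i in
          go j (INT (int_of_string (String.sub s i (j - i))) :: acc)
      | c when is_alpha c ->
          let j = span is_alpha i in
          go j (IDENT (String.sub s i (j - i)) :: acc)
      | c -> failwith (Printf.sprintf "unexpected character %C at %d" c i)
  in
  go 0 []
```

With a lexer generator, the same behaviour is described declaratively as a set of regular-expression rules, and the tool writes the scanning loop for you.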

Then I went ahead and installed Emacs as well. This is because Emacs needs to call OCaml for its OCaml mode, tuareg, to work properly. I found it extremely convenient to have a self-contained development environment, although Docker purists might prefer to keep the editor separate.

Make sure you are using Dune (previously called JBuilder) for building the project. You will probably still need a Makefile. Most of Punchscript is organized in a library, which can be built into bytecode or Javascript targets.

Read Issu’s recent post on OCaml Best Practices, if you are interested in an overview of modern OCaml development tools.

Learning the Mystical Arts

Setting up everything right might take a while, but that’s only the beginning. There are tons of resources on writing a compiler or learning OCaml, but hardly any on making a compiler in OCaml.

Here are some books and articles which really helped me:

- If you are a novice, then I would recommend reading OCaml from the Very Beginning.

- If you are already familiar with OCaml (like I was), then I would recommend reading the OCaml User Manual, available in many formats for offline reading (I wish they had an EPUB too for Kindle readers).

- If you want to learn or have forgotten compiler theory (what was LALR again?), then you can read Modern Compiler Implementation in ML, by Andrew W. Appel. Just Chapter 3 is enough to brush up on parsing.

- You ought to read the Menhir manual. The examples are extremely helpful.

- The TOSS tutorial is great at explaining the entire toolchain.

So you will need to read a lot of documentation to understand most OCaml tools. I know some get daunted by long manuals, but they are quite approachable. You just have to read some introductory sections and you should be good to go.

Wiring up a Live Demo

The fun part of the project was building a live demo for the web. The js_of_ocaml tool converts OCaml bytecode into compact Javascript code. The output was surprisingly small. The entire Punchscript interpreter is a single file weighing just 29K gzipped (132K minified)!

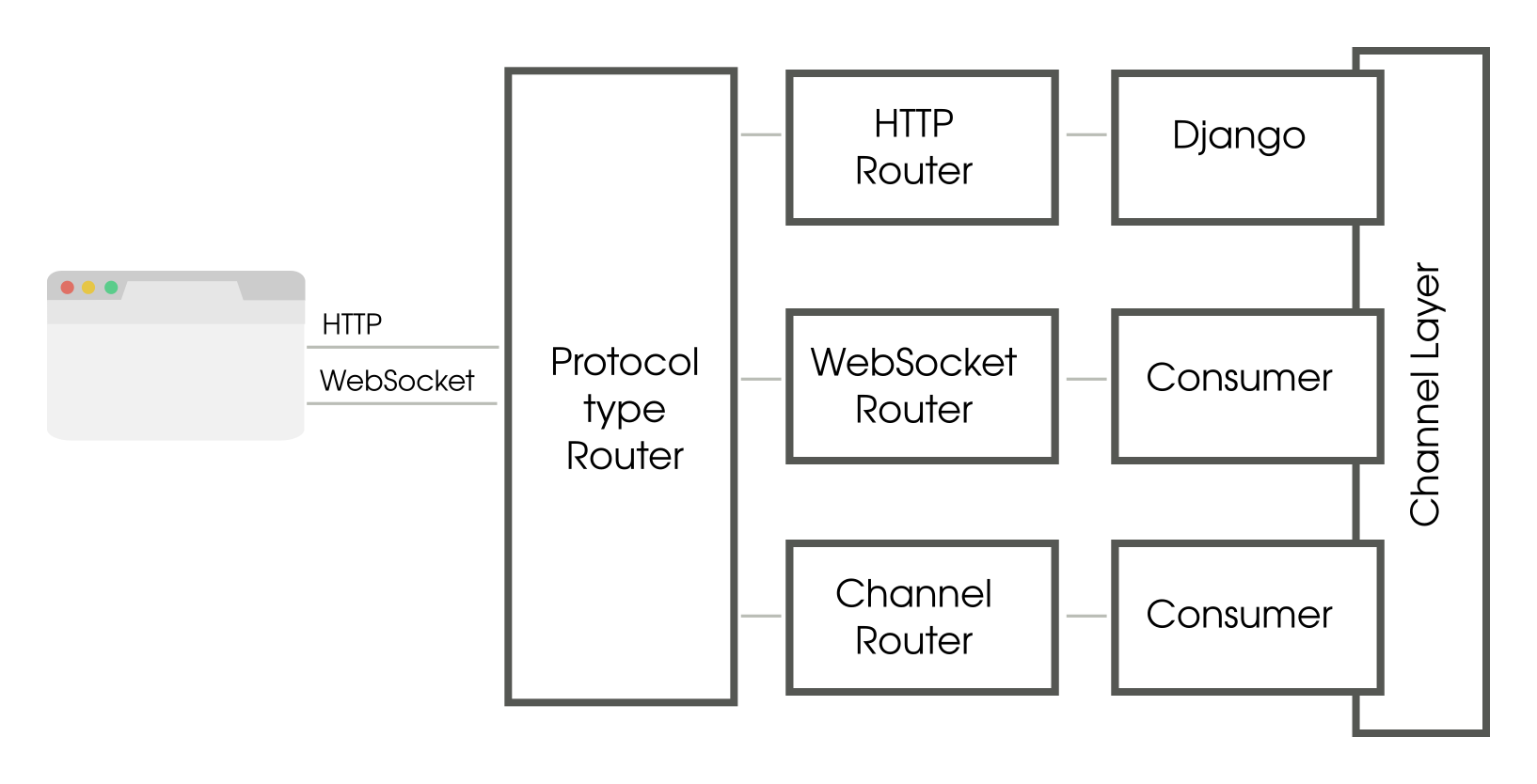

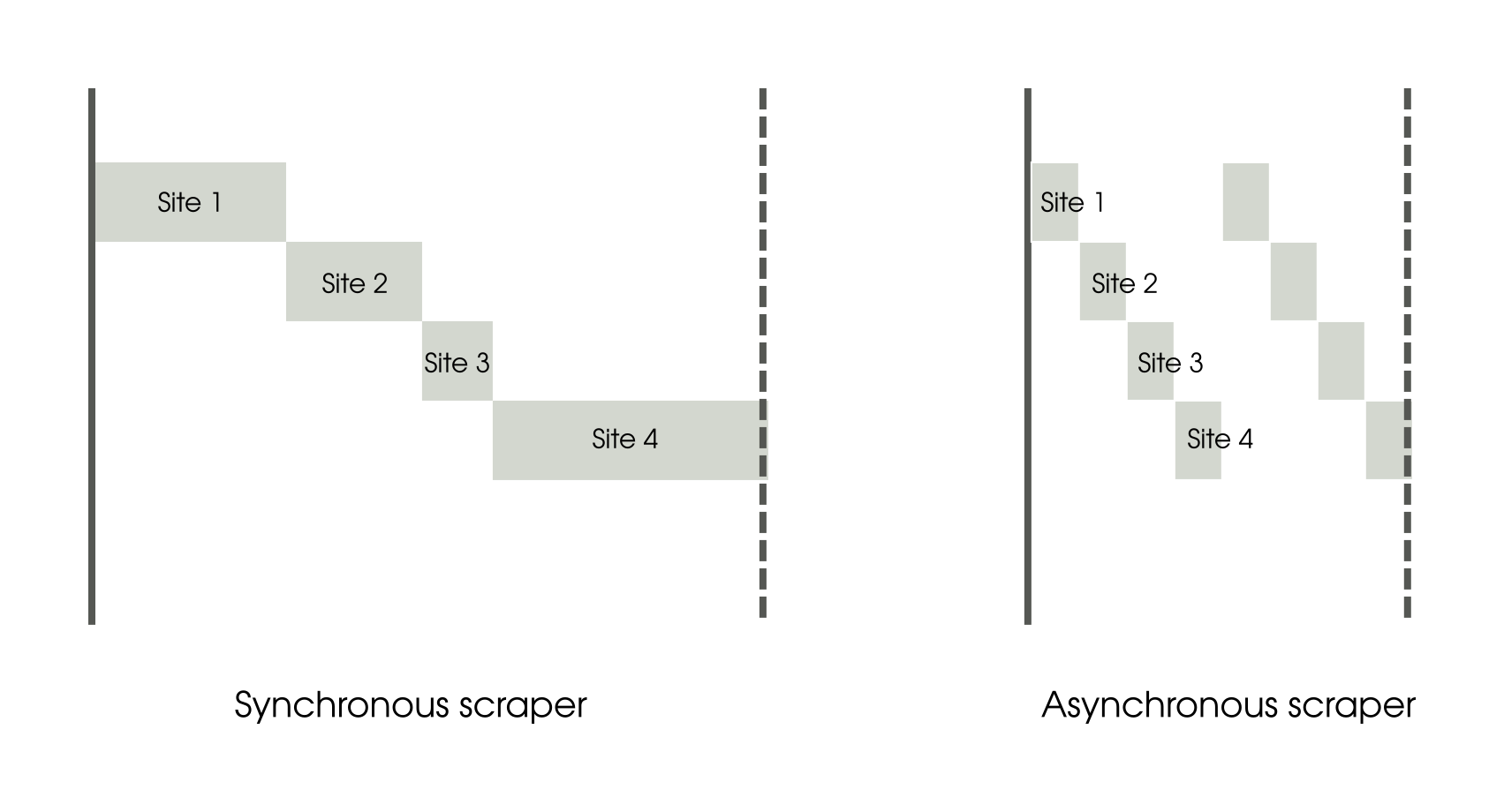

The interpreter is invoked from the page using Web workers. There is something magical about watching a web page interact with your OCaml program synthesized from an almost sterile world of functional programming. Web workers make the whole interaction asynchronous. No browser hangups while your code is running.

I always wanted the examples to have proper syntax highlighting in Punchscript. I used CodeMirror to build the code editor. Writing a custom syntax highlighter seemed to need a lot of Javascript code. So I heavily derived from the Python syntax highlighting mode.

Taking it Further

Punchscript is both a language and an implementation. Both have potential to grow. Language specs are public and new punch dialogues are welcome. You are also free to create your own implementation in your favourite language. I would be happy to link them.

Have fun coding with punch dialogues!

Thanks to Deepak and Ramakrishnan for reviewing the early drafts of the language specs.